Insights

How Data is Unlocking Capacity in Distribution Networks

Insights

Energy

8 Signs you need to upgrade your monitoring strategy

Insights

Energy

How hybrid wind-solar farms are maximising renewable energy output

Insights

Energy

AI demand is redefining data centre resilience

Insights

In a grid transforming faster than its models, the greatest risk is the one utility cannot see…

Insights

Energy

PRESense | Enabling a smarter network with load indices

Insights

Energy

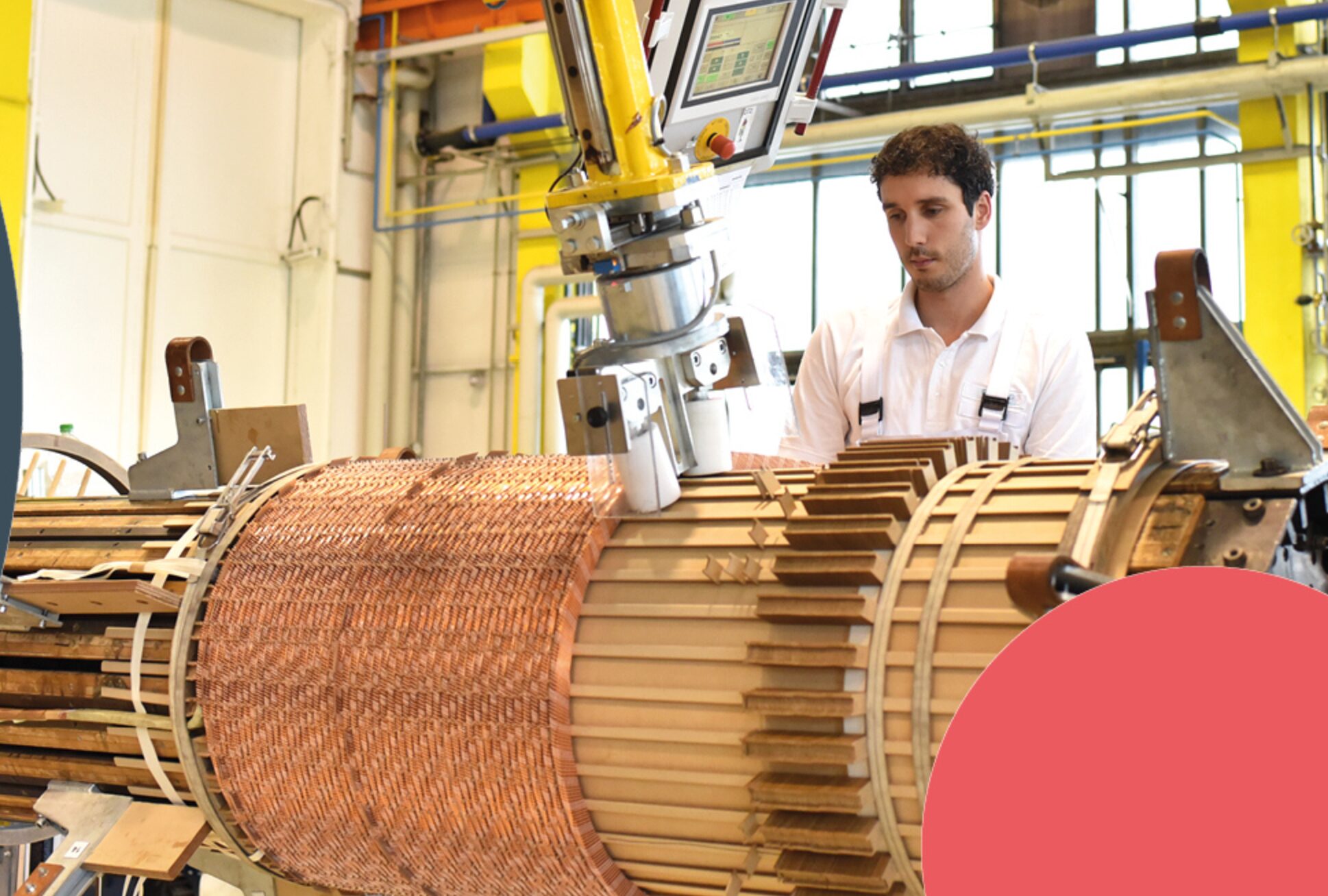

Transformer Insights: The key components in a transformer and why they fail

Insights

Energy

Transformer lead times: Why it matters to renewable energy producers

Insights

Energy

Electricity North West targets improved safety and resilience with LineSIGHT